A recent conversation on Power BI touched on the subject of data modelling; namely, why would you model data for a wider business need than the report you happen to be building? What are the pros and cons of that approach, and which one outweighs the other? In true analytics terms, ‘data modelling’ is also known as ‘dimensional modelling’, but during this blog, ‘data modelling’ is the term we will use.

To answer these questions, you first have to talk about what data modelling is and how Power BI can handle it.

Power BI has a phenomenal capability when it comes to transforming data, which sees it rightly in the top spot for Analytics toolsets with organisations such as Gartner. If you look back 15 years or so, that same transformational capability would be an intensively technical process, involving a longer project design phase and probably a larger development team. Whilst there are still many occasions when you would still need a fully-fledged data warehouse, the transformational capability of Power BI moves the goalposts somewhat, especially for those companies that don’t have the will to start down the data warehouse route.

In the simplest of terms, data modelling is the art of transforming data to enable an easier process of gaining insight from your analytics. When we think of a database that sits behind an application, we see a complex schema that is designed for discrete reads and updates of small pieces of information. Whilst that is fine for the day-to-day running of that system, when it comes to gaining insight on that data such as trend over time, the volume of data being read for a single query is much larger – both in terms of the number of rows being read, but also how wide you are reading data across the database. With this very different data need, you couldn’t realistically rely on data being in one structure to suit the needs of the other. So when we talk about data modelling, we are talking about making the effort of producing analytics much more efficient from a technical standpoint, as well as one of human understanding. When you model the data for more efficient reporting, you are also making it easier for non-technical people to interact with it. Data modelling is, therefore, the art of making data make more sense.

At this point, it would be remiss to talk about data modelling without mentioning the Kimball Methodology – this looks at data modelling in the terms of Fact and Dimension tables (hence the phrase ‘Dimensional Modelling’). Here, we have a central Fact table and surrounding Dimension tables. The Fact describes an event that has taken place, such as a retailer making a sale. The Dimension tables describe the event that has taken place – when the sale happened, what was sold, who bought it and so on. There is a lot more to it than that, but in well-modelled data, you should have a central Fact table and outlying Dimension tables, diagrammatically arranged in a star. Crucially, in the Kimball Methodology you can add more Fact tables as you go along, each one referencing different Dimensions that are usually related to other Fact tables. Again using the retail example, you may have a Product Dimension table that joins with Sales and Stock Fact tables. By modelling the data in this way, we are looking at making it easier to join more pieces of data together, possibly from different sources, via each of these common Dimensions.

Going back to the first of the questions asked at the start of this blog, why would you model data for a wider business need than the report you happen to be building?

There are many benefits in modelling the data for reports and report writers – whilst it may be a slightly longer design and first phase of development, subsequent data modelling exercises are quicker with dimensions already modelled from earlier phases. This in turn means that you have a good chance of the current development supporting future reporting needs, even if they haven’t been directly planned in the data model. You will also find that non-technical people can more easily understand the data model, allowing them to do more with the data – this often means not having technically skilled people being a bottleneck for report development, so more people are doing more with the data. This also means the DAX calculations in Power BI reports are usually much easier to produce and for other people to understand – this is a very important point that is often overlooked during development; aren’t we supposed to be finding these tools easier to use for our end business users? Modelling the data instead of loading the effort in complex DAX means we are allowing more usability for the end users, we are also making the report interactions much more efficient – the DAX that is run with every visual interaction happens much more quickly, making for a better consumer experience.

The second question asked at the top of the blog was about the pros and cons of modelling the data, and which method outweighs the other.

The Pros of modelling data in Power BI:

- Data is modelled to allow more people to understand it and therefore you have more people self-servicing their own report development from the modelled data. You have a semantic model that can satisfy multiple reporting needs, making it far more useable and offering a greater return on development costs.

- Report interactions are much quicker, with all the effort being moved from run-time DAX calculations to the point the semantic model is refreshed, such as overnight.

- Report maintenance is arguably much easier, with less head scratching over someone else’s impressive, but difficult to understand DAX formulas.

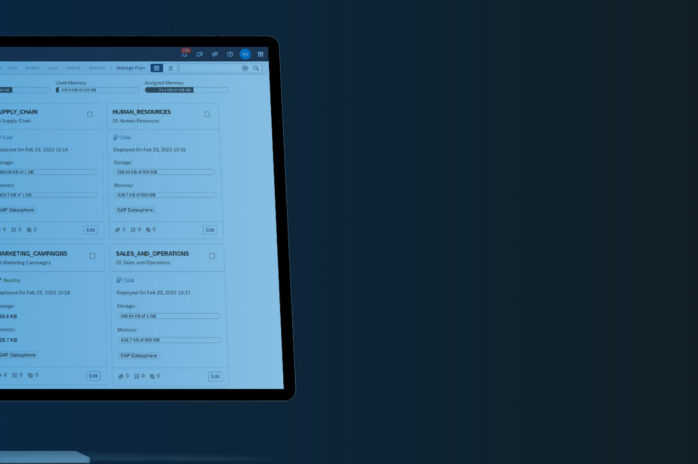

- You end up with fewer variations of the same data hanging around your Power BI Service, which can be the tipping point to a more expensive Power BI licensing model (or result in lots of tricky model owner administration in the service). This can be further mitigated by the Microsoft Fabric flavour of Power BI, which only keeps a single version of the data, irrespective of how many semantic models use it.

The Cons of modelling data in Power BI:

- Producing the semantic model requires a little more thought in the design process, and a slightly longer development phase at first. This is outweighed by the benefits of later development phases already having Dimensional data modelled in the service – this can make those later phases much quicker.

- Data modelled in this way can take up slightly more space. This is also outweighed by the benefits of being able to link new Facts into Dimensions that already exist – you aren’t repetitively storing the same descriptive data in multiple tables. It is also less of an issue with the way Power BI stores data, opting for columnar storage that strips out duplicates, instead of a traditional row storage you find in database tables.

- There is more information out there on writing DAX to get round a problem than using data modelling to get round that same problem. This is true, but the once you get to grips with the principles of data modelling, it gets much easier and you will find you can avoid some pretty tricky DAX calculations if you improve your data modelling behaviours.

When weighing up the pros and cons of data modelling, it should be quite clear to see there are many reasons why the data should be modelled following some of the principles set out in the long-established Kimball Methodology. Whilst the methodology does have some more complex principles you wouldn’t necessarily entertain in Power BI, getting the data structure right is a principle that should be followed as a routine behaviour.

Written by Mike Hobson, Pre-Sales Consultant, Codestone

Contact enquiries@codestone.net to talk to our experts.

We should be talking.

It will be worth it.

Or let’s talk about how we can help you now.